I tend to be skeptical of claims that AI is going to lead to big breakthroughs in medicine. Drug discovery is the usual arena for such claims, the notion being that algorithms will pick over drug datasets to open a pipeline of new therapeutics.

I’m pretty confident that AI plays will not revolutionize drug discovery. No doubt they will find a few Easter eggs that humans have overlooked. But a lot of very smart people with a lot of resources have been screening drug candidates intensively for decades now. This hunt may not have been optimally efficient, but it has been extensive and I doubt that it has missed much.

But what is true for drug discovery is not necessarily true for clinical practice. Researchers chasing drug leads can succeed by brute force and have plenty of chances to try, try again: one success pays for many failures. Clinicians treating patients with complex acute diseases have no such luxury and could use some help.

Few diseases have proven as complex and intractable to treatment as sepsis. Sepsis is the immune system going haywire in response to infection. With all its redundancies and intricate feedback loops, the immune system can malfunction in many different ways. Consequently, all attempts to develop drug treatments for sepsis have ended in tears: despite more than a hundred Phase 2 and 3 clinical trials, no drug has shown convincing evidence of efficacy. Sepsis mortality has been hanging around 20% for decades, showing little sign of improvement.

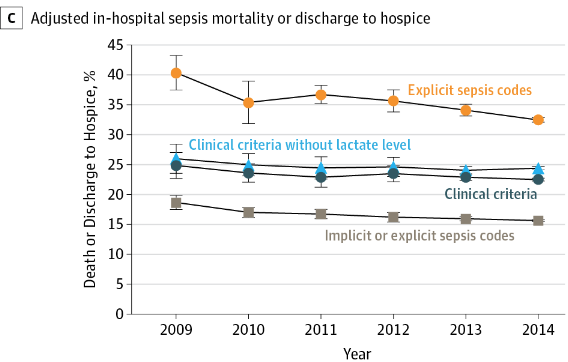

From Incidence and Trends of Sepsis in US Hospitals, 2009-2014

Therapy largely consists of timely administration of appropriate antibiotics and maintenance of blood pressure through administration of IV fluids and vasopressors. These treatments have been incorporated into a sepsis “bundle”, and Medicare payments to hospitals are based in part on their adherence to the bundle.

Current anti-hypotensive therapy regimens have not been shown to be effective, however. This is why concerns over the ethics of the CLOVERS sepsis trial are misplaced: deviating from a standard of care that is not effective is not equivalent to treating patients like lab animals, as claimed.

Sepsis is a good target for AI-informed intervention: the problem is big; patients are monitored intensively so there are lots of data to analyze; the disease is heterogeneous, with many comorbidities to act as confounding variables; and there is a clearly defined outcome (mortality). In short, it’s a disease that’s very hard for human brains to analyze rigorously and without falling into cognitive traps, but one that should be amenable to machine learning techniques.

That’s the setup for this paper in Nature Medicine, “The Artificial Intelligence Clinician learns optimal treatment strategies for sepsis in intensive care”. I won’t pretend to know anything about machine learning techniques, but the overall approach seems sound: input a large set of patient variables, including demographics, history, blood chemistry and physiology; combine these with the anti-hypotensive therapies physicians actually gave; correlate with outcomes on a training set and then validate on a second, independent data set.

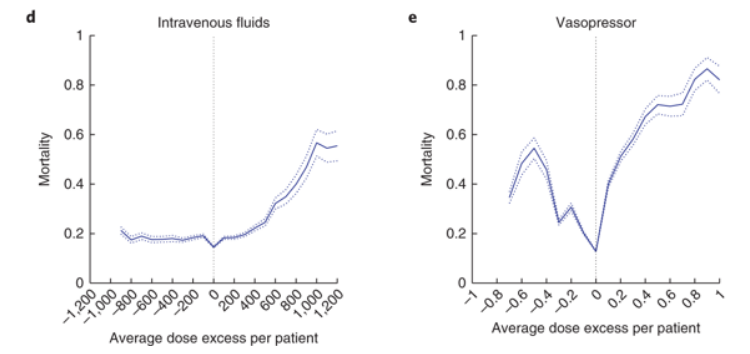

Here are the key results, graphed as mortality vs concordance between actual and AI-suggested treatment courses:

The x-axis shows the difference between actual and suggested doses of IV fluids or vasopressors. A value of zero means they were identical, and this is where mortality is at a minimum. This is no surprise, since that’s what the AI algorithm was set up to do.
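For readers who want to see how a curve like this is put together, here is a minimal sketch, not the paper's method. It bins patients by the gap between the dose they actually received and the dose that was suggested, then computes the death rate in each bin. The function name `mortality_by_dose_gap`, the bin width and every record in `toy_records` are made up for illustration; none of the numbers come from the paper.

```python
from collections import defaultdict


def mortality_by_dose_gap(records, bin_width):
    """Return {bin_center: death rate} for (actual - suggested) dose gaps.

    records: iterable of (actual_dose, suggested_dose, died) tuples.
    """
    deaths = defaultdict(int)
    totals = defaultdict(int)
    for actual, suggested, died in records:
        gap = actual - suggested
        # Snap each gap to the nearest multiple of bin_width.
        bin_center = round(gap / bin_width) * bin_width
        totals[bin_center] += 1
        deaths[bin_center] += int(died)
    return {b: deaths[b] / totals[b] for b in sorted(totals)}


# Hypothetical toy records, invented purely to exercise the function:
# (actual dose, suggested dose, died?)
toy_records = [
    (1.0, 1.0, False), (2.0, 2.0, False), (1.5, 1.5, False), (3.0, 3.0, True),
    (3.0, 1.0, True), (4.0, 2.0, True), (2.5, 0.5, False), (5.0, 3.0, True),
    (0.0, 2.0, False), (1.0, 3.0, True), (0.5, 2.5, False), (2.0, 4.0, False),
]

curve = mortality_by_dose_gap(toy_records, bin_width=2.0)
for gap, rate in curve.items():
    print(f"dose gap {gap:+.1f}: mortality {rate:.0%}")
```

A gap of zero means the clinician and the algorithm agreed. A real analysis would also have to adjust for how sick each patient was, which this sketch ignores.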

The shapes of the response difference curves are informative. IV fluids given at the suggested level and below are pretty much at an optimum. But bad things start happening when fluids are given above the recommended level, with mortality tripling at the highest levels. I doubt anyone expected that result. The likely response of most physicians when patients remain hypotensive is to keep giving fluids.

The vasopressor graph is downright frightening. There is a very narrow therapeutic window and any deviations above or below it cause steep increases in mortality. Remember, this is not a “one-size-fits-all” treatment course where one dose of vasopressors is right for all patients. That would be easy to implement, and if it existed we would already have found it without the aid of AI. This is instead a therapeutic window that is both narrow and highly conditional on patient history and physiology. No wonder effective sepsis therapy is so difficult.

Of course this is just one paper. I imagine physicians would want to see at least one other group, with a different data set and a different machine learning algorithm, replicate the results before testing them out on real patients. But this paper suggests that such an effort should have high priority.

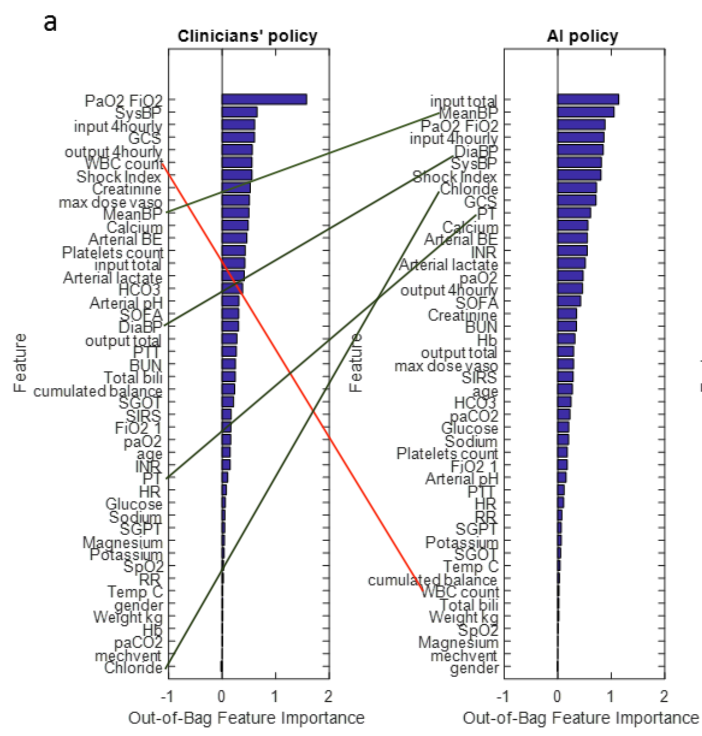

A couple of other results in the supplementary data are worth noting. Here are graphs that compare the relative weight given by physicians and AI to different parameters. First, the comparison for IV fluids:

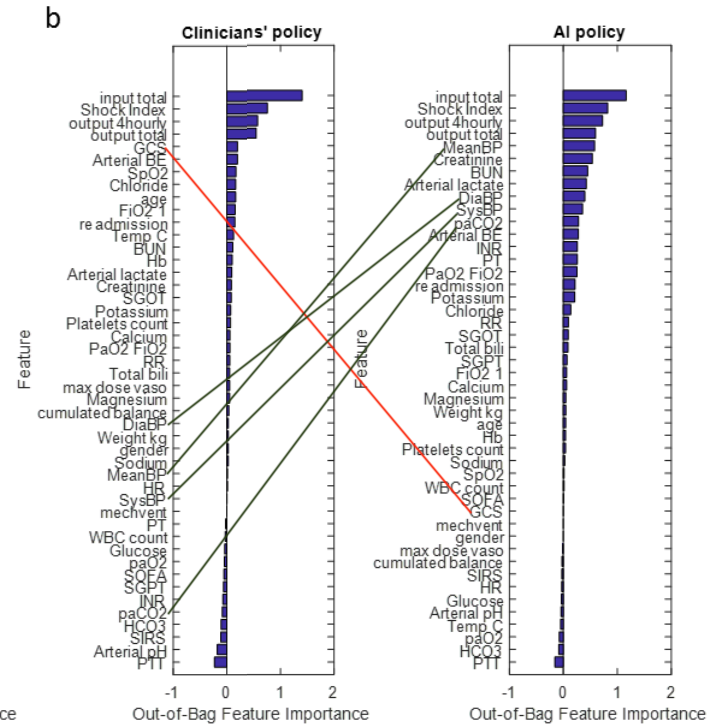

And the same comparison for vasopressors:

The first thing to note is that most of these variables are given similar ordinal weights by both experienced clinicians and AI. This is reassuring: it is implausible that clinicians have learned nothing about treating sepsis over the decades, so wholesale disagreement with them would instead be a sign that the AI approach is badly off track. I’ve marked up the biggest discrepancies in weighting.

But the more interesting feature of these graphs is their overall shape for clinician vs AI. Clinicians rely heavily on just a few inputs, while AI assigns importance to far more variables.
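One rough way to put a number on "overall shape" is to ask how many inputs it takes to account for most of the total weight. The sketch below does that for two invented weight profiles. The helper `features_for_share`, the 80% threshold, the input names and all the weights are assumptions made for illustration, not values read off the paper's graphs.

```python
def features_for_share(weights, share=0.8):
    """Count how many of the largest weights are needed to reach
    `share` of the total weight."""
    total = sum(weights.values())
    running = 0.0
    ranked = sorted(weights.values(), reverse=True)
    for count, weight in enumerate(ranked, start=1):
        running += weight
        if running >= share * total:
            return count
    return len(weights)


# Hypothetical profiles: one concentrated on a few inputs, one spread out.
concentrated = {"input_1": 55, "input_2": 30, "input_3": 5,
                "input_4": 4, "input_5": 3, "input_6": 3}
spread = {"input_1": 20, "input_2": 18, "input_3": 17,
          "input_4": 16, "input_5": 15, "input_6": 14}

print(features_for_share(concentrated))  # few inputs carry most of the weight
print(features_for_share(spread))        # weight is shared across many inputs
```

A profile where two inputs cover 80% of the weight looks like the clinician graphs; one that needs nearly all of its inputs looks like the AI graphs.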

This accords with what we know about human minds. Our working memories are astonishingly feeble, as any number of studies have shown: 3-5 items are about all we can keep in mind at any one time. And our hold on these is easily broken by distractions. It’s no surprise that harried clinicians focus on a few key inputs.

Computers, of course, suffer no such frailties, and could productively employ thousands of data points in suggesting a course of treatment. They also are impervious to disruptive demands on their attention.

If indeed more than a few data points are valuable in determining optimal treatment for sepsis, then it is inevitable that AI algorithms will end up outperforming humans. It may not be the particular algorithm generated in this paper, but an optimized treatment algorithm is out there and it will be found.

This might be bad news for fans of medical dramas featuring heroic doctors, but it will be very good news for the 1.7 million Americans stricken with sepsis each year, of whom 270,000 die. Even a tiny dent in the case fatality rate would save thousands of lives — more, for instance, than personalized medicine for cancer ever will.
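The arithmetic behind "thousands of lives" is easy to check. This sketch uses only the two figures quoted above (1.7 million cases, 270,000 deaths); the sizes of the hypothetical drops in case fatality are my own choices for illustration, not predictions from the paper.

```python
CASES_PER_YEAR = 1_700_000   # US sepsis cases per year, as quoted above
DEATHS_PER_YEAR = 270_000    # US sepsis deaths per year, as quoted above

# Implied case fatality rate from those two figures.
case_fatality = DEATHS_PER_YEAR / CASES_PER_YEAR


def lives_saved(percentage_points):
    """Deaths avoided per year if case fatality fell by the given
    number of percentage points (a hypothetical improvement)."""
    return round(CASES_PER_YEAR * percentage_points / 100)


print(f"implied case fatality: {case_fatality:.1%}")
for points in (0.5, 1.0, 2.0):
    print(f"{points} point drop -> {lives_saved(points):,} fewer deaths per year")
```

Even a one percentage point drop in case fatality works out to 17,000 fewer deaths a year.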

This paper is a very big deal, and I hope it is followed up on, and soon.

And since I haven’t seen a word about this in the popular press, I’ll tag this post as another Sci Journo Fail. They have missed a real story once again.